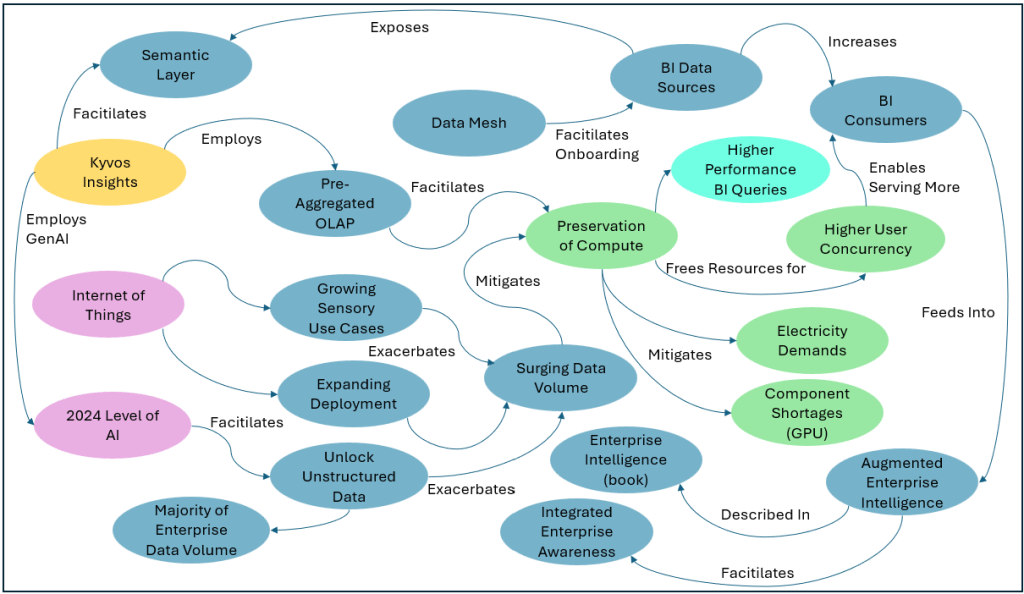

In this time of AI and IoT, the sheer volume of data is surging beyond merely massive towards overwhelming. Compute resources are limited, and “throwing more iron at the problem”—whether on-premise or scaling in the cloud—eventually doesn’t work anymore.

Sure, Moore’s Law followed by scaling the cloud has kept performance “good enough” for the past few decades. But that approach was a temporary bandage. It’s like trying to hold back a flood by piling up more sandbags: eventually, the water blasts through.

Volume of Data vs. Ability to Handle that Volume

A major use for today’s great-but-not-that-great level of AI is to extract information from massive volumes of unstructured data. Unstructured data such as photos, audio, and video generally makes up the majority of an enterprise’s data volume. That represents a potential flash flood of analytically valuable data that has been trapped in what is typically tens of TBs of data per enterprise.

When anything surges, at best it tests our capability, but it can also be devastating. When data volume surges, it’s going to outpace today’s hardware. When that moment comes, pre-aggregated OLAP is like your retention basin. The problem? It’s always underappreciated until hardware is too expensive or otherwise unable to handle a surging volume of data. Then poor performance moves beyond annoying.

Preservation of Compute

Caching of all forms (e.g., web page caching, RAM, L1/L2 cache on CPUs) is about preserving compute resources. Pre-aggregated OLAP is a form of cache. At its core, pre-aggregated OLAP serves as an optimization of the simple slice-and-dice (GROUP BY) operation. It’s a “process once, read many times” handling of data. The compute it saves, a resource you can’t afford to waste, can go towards much higher concurrency or simply be conserved.
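To make the “process once, read many times” idea concrete, here is a minimal sketch in Python. It is not how Kyvos implements pre-aggregation. The fact rows, the dimension names, and the `build_aggregates` and `query` helpers are all hypothetical, made up for illustration. The sketch makes a single pass over the facts, stores a SUM for every combination of dimensions, and then answers GROUP BY style queries as lookups.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical fact rows: (region, product, month, sales_amount)
FACTS = [
    ("West", "Widget", "2024-01", 100.0),
    ("West", "Widget", "2024-02", 150.0),
    ("West", "Gadget", "2024-01", 80.0),
    ("East", "Widget", "2024-01", 120.0),
    ("East", "Gadget", "2024-02", 60.0),
]
DIMENSIONS = ("region", "product", "month")


def build_aggregates(facts, dimensions):
    """Process once: one pass over the facts fills a SUM per grouping."""
    # Every subset of dimensions, kept in declared order, is one grouping.
    groupings = [
        dims
        for size in range(len(dimensions) + 1)
        for dims in combinations(range(len(dimensions)), size)
    ]
    aggregates = {
        tuple(dimensions[i] for i in dims): defaultdict(float)
        for dims in groupings
    }
    for row in facts:
        for dims in groupings:
            names = tuple(dimensions[i] for i in dims)
            key = tuple(row[i] for i in dims)
            aggregates[names][key] += row[-1]  # last column is the measure
    return aggregates


def query(aggregates, group_by):
    """Read many times: a GROUP BY becomes a lookup, with no scan of the facts.

    group_by must list dimension names in the same order as DIMENSIONS.
    """
    return dict(aggregates[tuple(group_by)])


aggregates = build_aggregates(FACTS, DIMENSIONS)
print(query(aggregates, ("region",)))            # sales by region
print(query(aggregates, ("region", "product")))  # sales by region and product
print(query(aggregates, ()))                     # grand total
```

The trade-off is the usual one for a cache: the build step and the storage for every grouping are paid up front, so that each later query costs a lookup instead of a scan. Real engines typically pick which groupings to materialize rather than storing all of them, since the number of combinations grows quickly with the number of dimensions.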

High Performance BI Queries

Kyvos’ pre-aggregated OLAP is a relatively simple, highly effective step towards protection against data volume surges. Stop relying on scaling up indefinitely. Instead, get ahead of the curve by optimizing your data infrastructure today. Pre-aggregation is a proven way to handle the AI and IoT tidal wave without burning through all our compute resources.

BI Consumers are More Than Analysts and Managers

Today’s BI information is valuable to far more than a handful of analysts; it is needed across all reaches of the enterprise. Data Mesh will facilitate the onboarding of previously neglected domains. But more integrated BI brings more BI consumers. With Kyvos’ pre-aggregated OLAP, the compute that is preserved can be used to support higher concurrency for that growing number of BI consumers.

Capturing the BI Insights of Thousands of BI Consumers

When BI consumers view a BI query through the visualization features of tools such as Tableau and Power BI, there are well-known characteristics of those visualizations that they look for. Those characteristics are insights they can use towards making better decisions. Those insights, whether or not an analyst notices them, could be saved in a centralized Insight Space Graph.

Those insights can be passively captured from the normal activity of thousands of BI users across all corners of the enterprise. All BI consumers would benefit from those insights collected in that diversely distributed manner. The Insight Space Graph is itself a cache—a cache of insights in turn built from a cache of pre-aggregated OLAP data.

The creation of a centralized insight database is the subject of my book, Enterprise Intelligence. Enterprise Intelligence is available at Technics Publications and Amazon. If you purchase the book from the Technics Publications site, use the coupon code TP25 for a 25% discount off most items (as of July 10, 2024).

Booming AI Demands on Electricity

Finally, with AI and IoT promising to increase data volume by orders of magnitude over the next few years, people are already asking where the required electricity will come from. As with any other resource, conservation is always a good practice. This goes for all other data center components prone to shortages, such as GPUs. Building all these data centers and consuming more energy in an environment already pushing its limits reminds me of the wanton California Gold Rush practice of hydraulic mining.