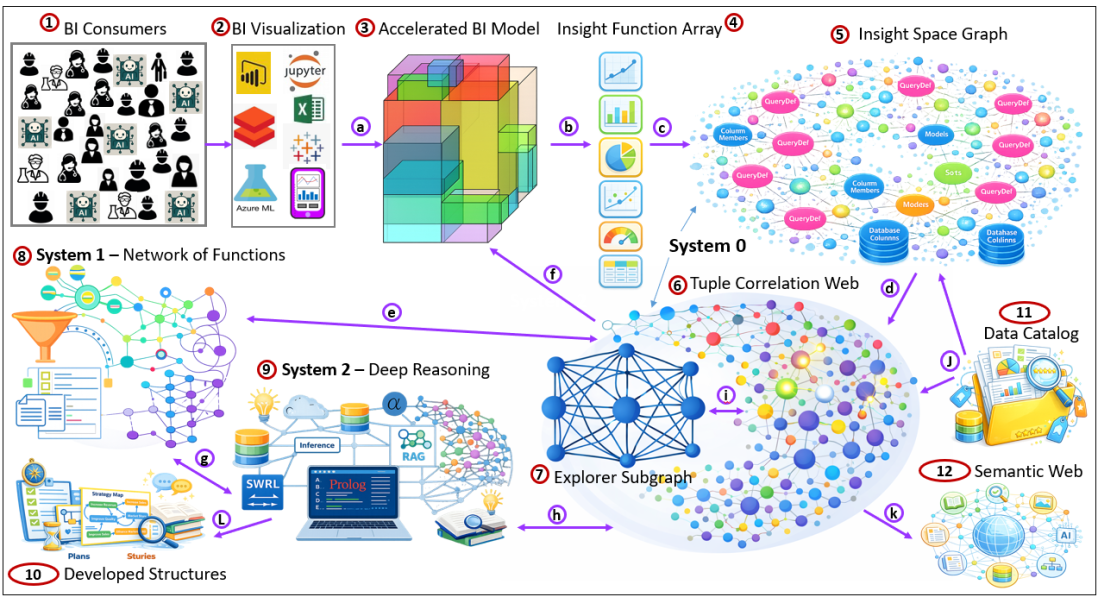

Abstract: In The Assemblage of AI architecture, System 2 is modeled after the deliberate reasoning processes described in human cognition. But rather than focusing on psychology, this article examines what System 2 produces inside an AGI system. The outputs are not merely answers to questions but structured artifacts such as stories, plans, procedures, and models. … Continue reading The Products of System 2 – Prolog in the LLM Era – Springbreak 2026 Special

An Interlude Before the Third Act of “The Assemblage of AI”

In the conclusion of my last blog, Chains of Unstable Correlations, I wrote: When you work around experts long enough, you notice that experts sometimes (usually?) forget what isn’t obvious to non-experts. Conversely, people in the field often see things experts overlook because they live inside the operational texture of the system. After a conversation … Continue reading An Interlude Before the Third Act of “The Assemblage of AI”

Chains of Unstable Correlations

Introduction: Like it or not, competition is arguably the biggest force affecting how things in our world work, in terms of both evolution and society. Without competition among genes, at the least, life on Earth wouldn't be as it is today. And for better or worse, societal competition at various levels (individual, tribes/cities, countries) wouldn't … Continue reading Chains of Unstable Correlations

Explorer Subgraph—The Dynamic Cartography of Relation Space

Abstract This blog introduces the Explorer Subgraph, a navigational knowledge structure designed to support System ⅈ (the ⅈ as in the imaginary number). I introduced System ⅈ a few weeks ago in my blog, System ⅈ: The Default Mode Network of AGI. The Explorer Subgraph is a background, always-on layer of intelligence that operates below conscious … Continue reading Explorer Subgraph—The Dynamic Cartography of Relation Space

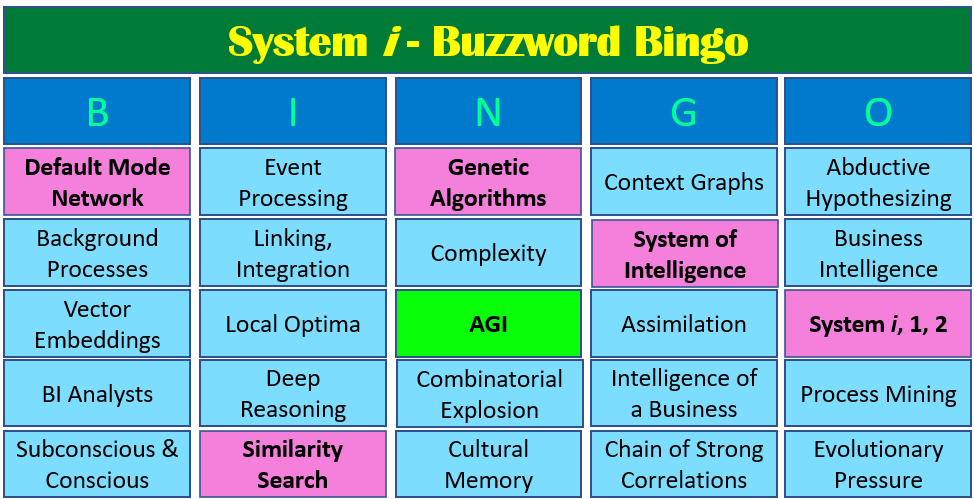

System ⅈ: The Default Mode Network of AGI

Abstract: TL;DR. Preface: The System ⅈ Origin Story. For my AI projects back in 2004, SCL and TOSN, the main architectural concept centered on how I imagined our conscious and subconscious work. In my notes, I referred to the conscious as the "single-threaded consciousness". The single-threaded consciousness made sense to me because we have just one … Continue reading System ⅈ: The Default Mode Network of AGI

The Complex Game of Planning

This is my final post for 2025, just in time for your holiday-season reading, ideally from a comfy chair next to the fireplace. It's food for thought for your 2026 New Year resolutions. So toss out that tired old "'Twas the Night Before Christmas" and read this new tale to the kids and grandkids. ✨🎄🔥📚☕❄️🎁 … Continue reading The Complex Game of Planning

Conditional Trade-Off Graphs – Prolog in the LLM Era – AI 3rd Anniversary Special

Skip Intro. 🎉 Welcome to the AI “Go-to-Market” 3rd Anniversary Special!! 🎉 Starring ... 🌐 The Semantic Web ⚙️ Event Processing 📊 Machine Learning 🌀 Vibe Coding 🦕 Prolog … and your host … 🤖 ChatGPT!!! Following is ChatGPT 5's self-written, unedited, introduction monologue—in a Johnny Carson style. Please do keep reading because this blog … Continue reading Conditional Trade-Off Graphs – Prolog in the LLM Era – AI 3rd Anniversary Special

Long Live LLMs! The Central Knowledge System of Analogy

Situation: Over the past few months there has been a trend to move from referring to "large language models" (LLMs) to just "language models". The reason is the recognition that small language models (SLMs) have clear advantages in certain situations. So "language model" is inclusive of both. And of course, as soon as … Continue reading Long Live LLMs! The Central Knowledge System of Analogy

Context Engineering and My Two Books

“Context engineering” is emerging as the evolutionary step over prompt engineering. It's the deliberate design of everything an AI system can access before it produces an answer. The goal is to make it work on the right problem, with the right facts, under the right constraints. That is, by mitigating "context drift" as the AI … Continue reading Context Engineering and My Two Books

Stories are the Transactional Unit of Human-Level Intelligence

The transactional unit of meaningful human-to-human communication is a story. It's the incredibly versatile, somewhat scalable unit by which we teach each other meaningful experiences. Our brains recorded stories well before any hints of our ability to draw and write. We sit around a table or campfire sharing stories, not mere facts. We … Continue reading Stories are the Transactional Unit of Human-Level Intelligence