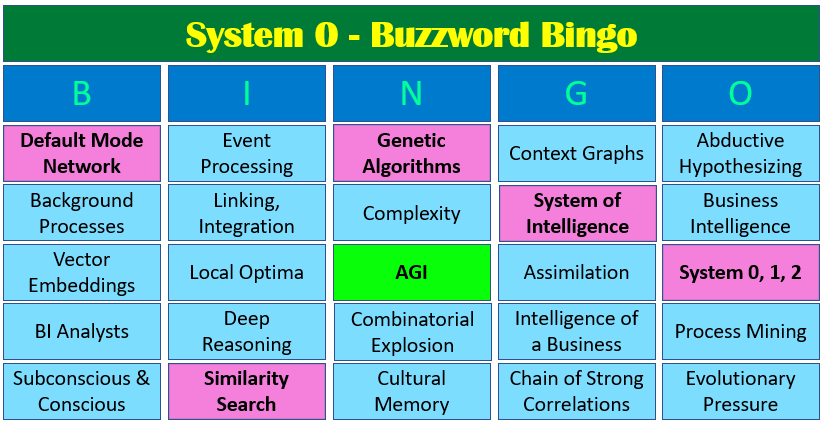

System 0: The Default Mode Network of AGI

TL;DR. Preface: The System 0 Origin Story. For my AI projects back in 2004, SCL and TOSN, the main architectural concept centered on how I imagined the conscious and subconscious minds work. In my notes, I referred to the conscious mind as the "single-threaded consciousness". The single-threaded consciousness made sense to me because we have just one body, …

Tag: nollm

The Complex Game of Planning

This is my final post for 2025, just in time for your holiday-season reading, ideally from a comfy chair next to the fireplace. It's food for thought for your 2026 New Year's resolutions. So toss out that tired old "'Twas the Night Before Christmas" and read this new tale to the kids and grandkids. ✨🎄🔥📚☕❄️🎁 …

Conditional Trade-Off Graphs – Prolog in the LLM Era – AI 3rd Anniversary Special

🎉 Welcome to the AI “Go-to-Market” 3rd Anniversary Special!! 🎉 Starring ... 🌐 The Semantic Web ⚙️ Event Processing 📊 Machine Learning 🌀 Vibe Coding 🦕 Prolog … and your host … 🤖 ChatGPT!!! What follows is ChatGPT 5's self-written, unedited introductory monologue, in the style of Johnny Carson. Please do keep reading because this blog …

Long Live LLMs! The Central Knowledge System of Analogy

Situation: Over the past few months, there has been a trend away from saying "large language models" (LLMs) toward just "language models". The reason is a growing recognition that small language models (SLMs) have clear advantages in certain cases, so "language model" covers both. And of course, as soon as …

Stories are the Transactional Unit of Human-Level Intelligence

The transactional unit of meaningful human-to-human communication is a story. It's the incredibly versatile, somewhat scalable unit by which we pass meaningful experiences on to one another. Our brains recorded stories well before any hint of our ability to draw and write. We sit around a table or campfire sharing stories, not mere facts. We …

Reptile Intelligence: An AI Summer for CEP

Complex Event Processing: AI doesn’t emerge from a hodgepodge of single-function models, such as a decision tree that predicts churn or a neural net that classifies an image, each of which sees only one quality of the world. The AGI-level intelligence that's being frantically chased requires massive, organized integration of learned rules. That is, a large, heterogeneous number of composed …

Embedding Machine Learning Models into Knowledge Graphs

Think about the usual depiction of a network of brain neurons. It’s almost always shown as a sprawling, kind of amorphous web, with no real structure or organization: just a big ball of connected neurons (like the Griswold Christmas lights). But this image misses so much of what makes the brain remarkable. Neurons aren’t just randomly …

Playing with Prolog – Prolog’s Role in the LLM Era, Part 3

This blog series explores the synergy between Prolog's deterministic rules and Large Language Models (LLMs). Parts 1 and 2 set the stage, discussing the use of Prolog alongside LLMs and Knowledge Graphs. This part presents a practical use case demonstrating how Prolog, aided by LLMs, can make meaningful contributions to AI systems. The series hopes to reignite interest in Prolog.

Prolog AI Agents – Prolog’s Role in the LLM Era, Part 2

This second post in the "Prolog's Role in the LLM Era" series discusses how Prolog offers transparent, clear rules for decision-making, in contrast to the complexity of neural network models. Fusing fuzzy LLMs with Prolog's determinism creates a stable, reliable AI system. As technology advances, this partnership will improve the efficiency and reliability of decision-making systems.

Prolog’s Role in the LLM Era – Part 1

This post is a comprehensive exploration of the relationship between Prolog and LLMs (Large Language Models), detailing their differences, strengths, and potential synergies in the field of AI. It discusses the distinctive attributes of Prolog as a deterministic knowledge base, contrasts it with LLMs, and illustrates scenarios where each excels. It also delves into the potential of ChatGPT to assist in authoring Prolog and in creating logical, methodical systems. Finally, it points to the emergence of the GPT Store as a manifestation of the original idea of "separation of logic and procedure". The post aims to be informative, educational, and forward-thinking: a resource for understanding the intersection of traditional logic-based AI and modern LLMs.